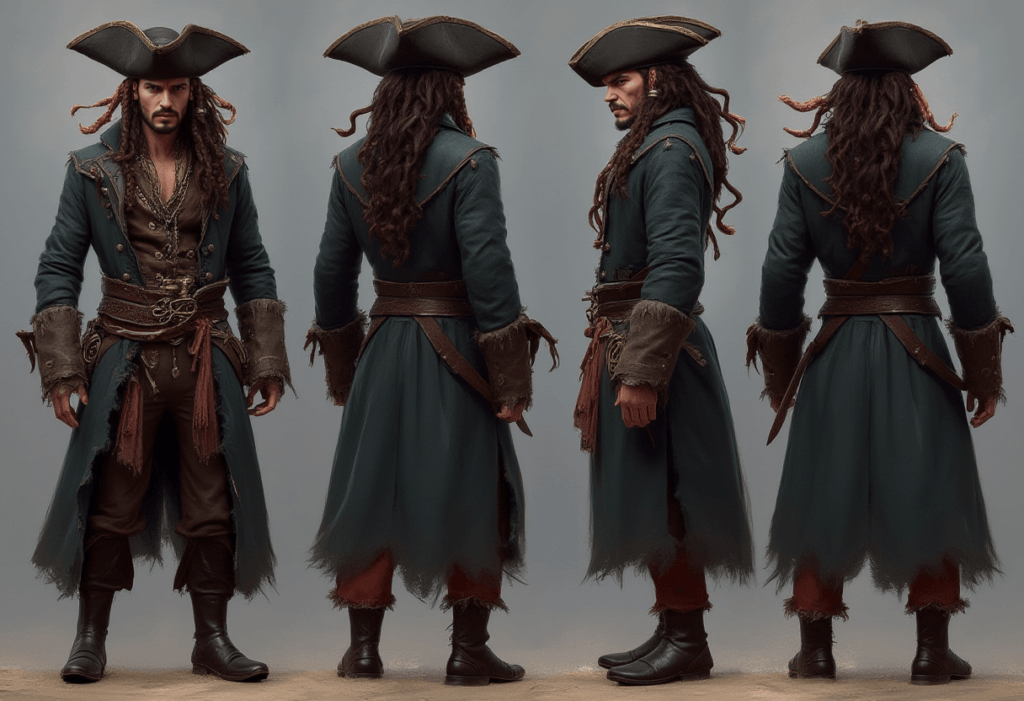

The Evolution of Character Concept Art

For years, tools like Photoshop and Blender have been the go-to choices for digital artists creating character concept art. Recent advances in AI-based tools have changed this space, making it possible to generate entire character sheets with just a few clicks.

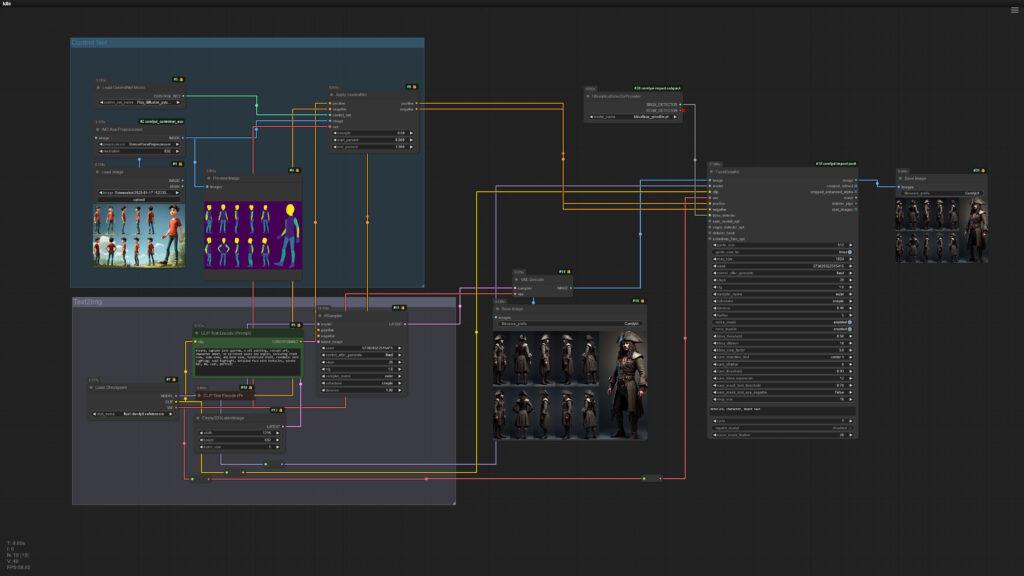

In the past, creating a single character concept required days of sketching, refining, and painting. Artists had to iterate manually, ensuring correct proportions, lighting, and textures. Now, AI allows us to generate complex character designs in a fraction of the time. Tools like ComfyUI, when combined with ControlNet, allow for fine control over poses and details, bridging the gap between AI automation and artistic intent.

Understanding ControlNet in ComfyUI

ControlNet is one of the most powerful components when working with AI-assisted character design. It allows the AI model to follow specific structural guidelines rather than generating an image based purely on a text prompt. This is especially useful for character sheets where consistent poses, perspectives, and anatomy are critical.

How ControlNet Works

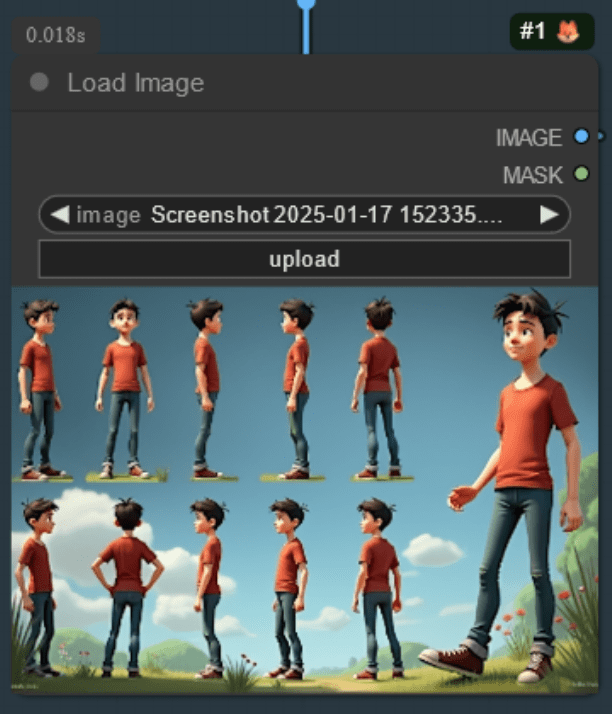

ControlNet operates by taking an input reference (such as a pose sheet, edge detection map, or depth map) and ensuring that the AI-generated output follows that predefined structure. In my experiment, I used a reference pose sheet (screen-grabbed from Goshnii AI's blog) to achieve consistent poses across different character views.
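To make "edge detection map" a bit more concrete, here is a minimal sketch of what such a structural reference looks like. It is a toy Sobel filter in plain Python, not the preprocessor ComfyUI actually runs; the function name `sobel_edge_map`, the threshold, and the 6x6 test image are all made up for illustration.

```python
def sobel_edge_map(gray, threshold=2.0):
    """Return a binary edge map (1 = edge) for a 2D list of grayscale values."""
    height, width = len(gray), len(gray[0])
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient kernel
    edges = [[0] * width for _ in range(height)]
    # Border pixels are skipped and stay 0 because the 3x3 window does not fit.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = sum(kx[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(ky[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


# Toy image (illustrative only): dark left half, bright right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
edge_map = sobel_edge_map(image)
for row in edge_map:
    print("".join("#" if value else "." for value in row))
```

The printed map marks only the vertical boundary between the dark and bright halves. That outline-only view is the kind of structure a ControlNet can be asked to follow, while color and texture are left to the prompt.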

The general steps in the ControlNet pipeline are:

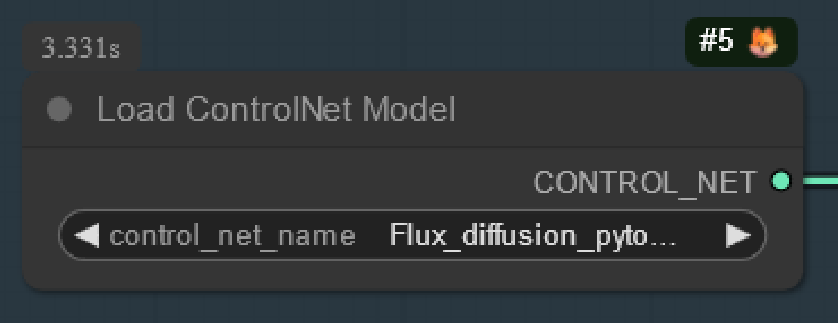

1. Load ControlNet Model

This initializes the ControlNet system within ComfyUI, defining how the AI should interpret the structural reference. For this workflow, I’m using Flux.1-dev-controlNet-union.

2. Input Reference Image

A structured pose guide (e.g., from a dataset, hand-drawn sketches, or an extracted reference from another source) is fed into ControlNet. This acts as a base point that the AI uses to generate the image.

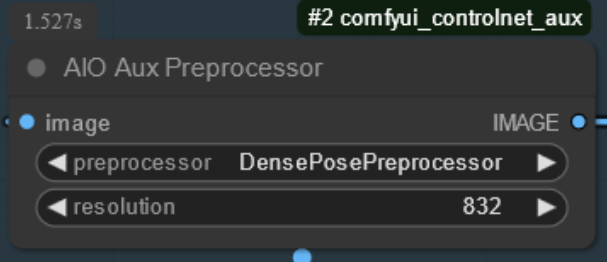

3. Extract Data from Reference

The AIO Preprocessor node lets you choose from a range of preprocessing options, each suited to a specific task such as edge detection, depth estimation, or pose extraction. Its output is the structural map that ControlNet actually consumes.

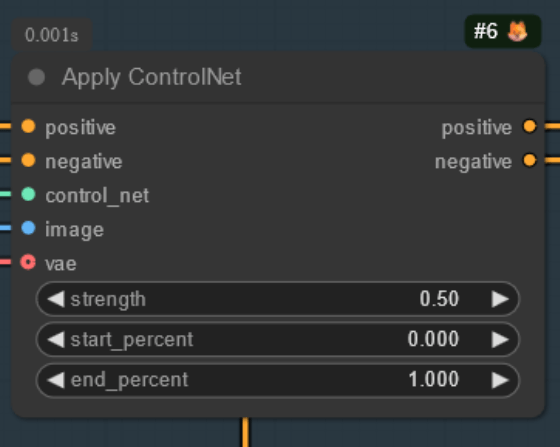

4. Apply ControlNet Constraints

ControlNet ensures that the AI-generated character maintains the pose, proportions, and silhouette from the reference. The Apply ControlNet node exposes a strength setting that controls how closely the output should follow the reference: higher values stick to it more rigidly, lower values give the model more freedom.

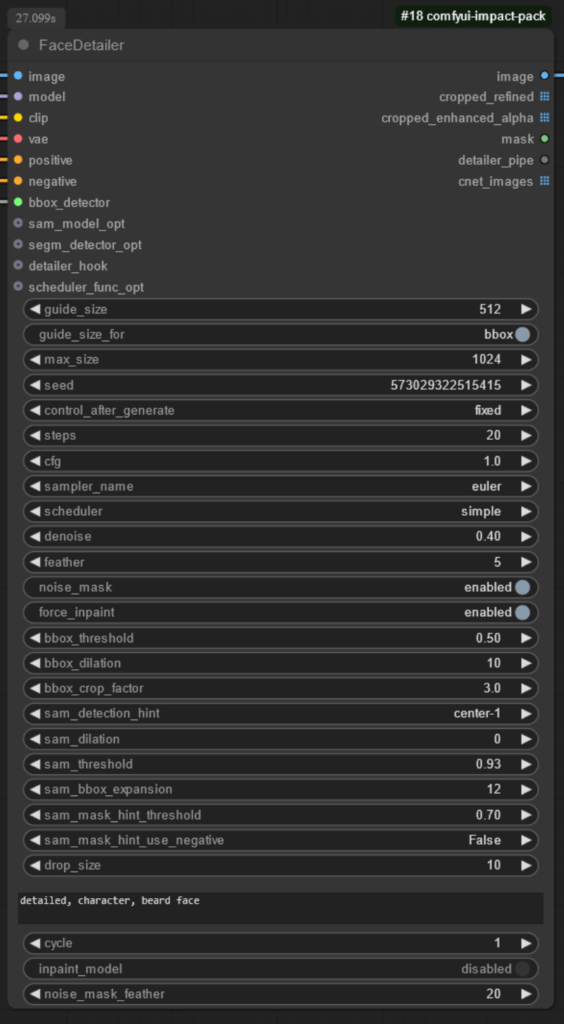

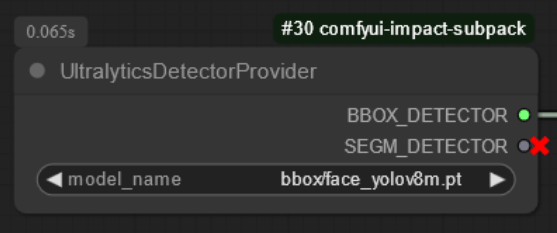
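The four steps above can also be written down as a fragment of a ComfyUI workflow in API format, where each node is a dictionary entry and links are `[node_id, output_index]` pairs. This is only a sketch under assumptions: the node class names and input keys (`ControlNetLoader`, `LoadImage`, `AIO_Preprocessor`, `ControlNetApplyAdvanced`) are written from my memory of ComfyUI and the controlnet_aux custom nodes, and the file names, preprocessor name, and prompt node IDs are placeholders. Export your own graph in API format and compare before relying on any of it.

```python
import json


def build_controlnet_fragment(reference_image, controlnet_file, preprocessor, strength):
    """Build the ControlNet part of an API-format workflow as a plain dict."""
    if strength < 0:
        raise ValueError("strength must be non-negative")
    return {
        # Step 1: load the ControlNet model file.
        "1": {"class_type": "ControlNetLoader",
              "inputs": {"control_net_name": controlnet_file}},
        # Step 2: load the reference pose sheet.
        "2": {"class_type": "LoadImage",
              "inputs": {"image": reference_image}},
        # Step 3: turn the reference into a structural map.
        "3": {"class_type": "AIO_Preprocessor",
              "inputs": {"image": ["2", 0],
                         "preprocessor": preprocessor,
                         "resolution": 1024}},
        # Step 4: attach the map to the prompt conditioning.
        "4": {"class_type": "ControlNetApplyAdvanced",
              "inputs": {"positive": ["POSITIVE_PROMPT_NODE", 0],  # placeholder ID
                         "negative": ["NEGATIVE_PROMPT_NODE", 0],  # placeholder ID
                         "control_net": ["1", 0],
                         "image": ["3", 0],
                         "strength": strength,
                         "start_percent": 0.0,
                         "end_percent": 1.0}},
    }


# All argument values below are hypothetical examples.
workflow = build_controlnet_fragment(
    reference_image="pose_sheet.png",
    controlnet_file="flux-controlnet-union.safetensors",
    preprocessor="OpenposePreprocessor",
    strength=0.7,
)
print(json.dumps(workflow, indent=2))
```

Keeping `strength` as a function argument makes it easy to generate several variants of the same sheet and compare how tightly each one follows the pose reference.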

Refining the Face and Textures

With the UltralyticsDetectorProvider, I detected and refined the faces in the structured poses, which keeps character expressions clear and avoids AI-induced distortions. I then upscaled the output to enhance texture quality, bringing out intricate details like clothing patterns and lighting effects. To get started, download the face_yolov8m model.
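As I understand it, face refinement of this kind works on a padded crop around each detected face rather than on the whole image. The sketch below shows only that crop-region arithmetic. It is an assumption about the general approach, not the actual node code; `expand_face_box` and its `crop_factor` argument are hypothetical names, and the box coordinates are invented.

```python
def expand_face_box(box, image_size, crop_factor=3.0):
    """Grow a detected face box around its center and clamp it to the image.

    box is (x1, y1, x2, y2) in pixels; image_size is (width, height).
    """
    x1, y1, x2, y2 = box
    width, height = image_size
    center_x, center_y = (x1 + x2) / 2, (y1 + y2) / 2
    half_w = (x2 - x1) * crop_factor / 2
    half_h = (y2 - y1) * crop_factor / 2
    return (
        max(0, int(center_x - half_w)),
        max(0, int(center_y - half_h)),
        min(width, int(center_x + half_w)),
        min(height, int(center_y + half_h)),
    )


# Hypothetical detection on a 1024x1024 character sheet.
face_box = (400, 100, 500, 200)
crop_region = expand_face_box(face_box, image_size=(1024, 1024), crop_factor=2.0)
print(crop_region)
```

A larger crop factor gives the model more surrounding context (hair, neck, collar) when it redraws the face, at the cost of spending fewer pixels on the face itself.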

Final Thoughts

Generative AI has transformed how we approach character concept art. While AI tools can produce incredible results instantly, integrating systems like ControlNet in ComfyUI allows artists to retain control over structure and style. With the right balance of AI automation and manual fine-tuning, artists can create high-quality concept art efficiently. For more details, you can visit:

Goshnii AI: Comfyui consistent characters using FLUX DEV

Pixaroma: How to use SDXL ControlNet Union
